I have tried several ways to get the .NET Framework 3.5 installed on Server 2012, but the only way that works every time, and is super fast, is the command line (DISM, run from a command prompt or a PowerShell script).

On the Server 2012 media there is a folder called sources\sxs, so from an elevated command window I run:

dism /online /enable-feature /featurename:netfx3 /all /limitaccess /source:f:\sources\sxs

F:\sources\sxs is just wherever you have the SXS folder located.

Now, I was working on a project with installs around the world, and it was proving hard to get the media to all the different sites. I wondered whether copying just the .NET 3.5 cabs from that SXS folder would do the job, as they are only 18 MB, but alas it didn't work and I had to go back to the full folder. What I do now is put the SXS folder on a network share and use the network path in a PowerShell script.
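As a rough sketch of the network-share approach, the snippet below builds the same DISM command line with the source pointed at a share. The share name \\fileserver\install\sxs and both function names are made up for illustration, and the install function only makes sense in an elevated session on the target server.

```python
import subprocess
from typing import List


def build_netfx3_command(source_path: str) -> List[str]:
    """Return the DISM arguments that enable .NET 3.5 from the given SXS folder."""
    return [
        "dism", "/online", "/enable-feature", "/featurename:NetFx3",
        "/all", "/limitaccess", f"/source:{source_path}",
    ]


def install_netfx3(source_path: str) -> int:
    """Run DISM (needs an elevated session) and return its exit code."""
    return subprocess.run(build_netfx3_command(source_path)).returncode


if __name__ == "__main__":
    # Hypothetical share; point this at wherever you copied the full SXS folder.
    share = r"\\fileserver\install\sxs"
    print(" ".join(build_netfx3_command(share)))
```

Keeping the command builder separate from the call that runs it means you can print and check the command line before letting it loose on a server.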

This blog is about my ramblings and findings working with System Center 2012

Monday, May 20, 2013

SQL Permissions Error on setup (The SQL service account login and password is not valid)

I was installing several SQL instances for an SCCM pre-prod environment that was going to mimic a production build but on a much smaller scale. I was using a specific AD account and it had to span 3 domains in the same forest. In the first domain (where the account existed) I had no issues. Once I went to the other 2 domains I kept getting the same 2 errors, namely

"The SQL Server service account login or password is not valid"

Or

"The credentials you provided for the Reporting Service are invalid"

I make as many mistakes as the next person, so I wrote down the username and password in Notepad and then opened PowerShell as that user (so I definitely knew I was using the correct username and password).

I am crap at reading past error messages, but the second error caught my eye.

So it is telling me here that if I have an issue with the domain account I can go and change that in SQL Server Configuration Manager. So I can perform my install with the standard built-in accounts and then change them when the install is done. But the really important thing here is that you shouldn't go to services.msc and change the account that any of the SQL services run under, as this will not allow SQL to properly configure the services. One of the things that SQL Server Configuration Manager does is grant the user the right to log on as a service. (That might have been my issue, but it still does not make sense when SQL Server Configuration Manager can make the changes.)

Open SQL Server Configuration Manager, select the SQL services and change the user account from the built-in account to the domain account you want.

Now you can see that I have all the services changed to domain accounts, except for the Reporting Service.

To change the SSRS account you need to open the Reporting Services Configuration Manager. Before we can change the account to a domain account we need to back up the encryption key.

Now when I look at the SSRS page there is a domain account and not a built-in account.

And the same can be seen on the SQL Server Configuration Manager page.

There are 2 last points here. If you are installing SCCM you must use network (domain) accounts; you cannot use the built-in SQL accounts. And if you are running these services under a domain account then you will need to register the SPN.
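For the SPN registration mentioned in this post, here is a small sketch that works out the usual MSSQLSvc SPN strings for an instance and the matching setspn -S commands. The server name, account name and function names are all invented for the example, and it only prints the commands, so run them yourself with an account that has the rights to write SPNs.

```python
from typing import List, Optional


def sql_spns(fqdn: str, port: int = 1433, instance: Optional[str] = None) -> List[str]:
    """Return the MSSQLSvc SPNs normally registered for one SQL Server instance."""
    spns = [f"MSSQLSvc/{fqdn}:{port}"]
    if instance:
        # Named instance: a second SPN carries the instance name.
        spns.append(f"MSSQLSvc/{fqdn}:{instance}")
    else:
        # Default instance: a second SPN with no port or instance suffix.
        spns.append(f"MSSQLSvc/{fqdn}")
    return spns


def setspn_commands(spns: List[str], account: str) -> List[str]:
    """Return one 'setspn -S' command per SPN (-S checks for duplicates first)."""
    return [f"setspn -S {spn} {account}" for spn in spns]


if __name__ == "__main__":
    # Hypothetical server and service account names.
    for line in setspn_commands(sql_spns("sql01.corp.example.com"), r"CORP\svc-sql"):
        print(line)
```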

To CAS or not to CAS....

I have been designing and deploying Configuration Manager for 12 years. In recent years I have been lucky enough to be working on some large scale SCCM projects. So what is large scale to me? Anything over 40,000 seats goes into the "large scale" category for me. The CAS is nothing entirely new to SCCM: in 2007 we had the idea of the central site, and in many ways it acted in the same way as the CAS. The CAS, or Central Administration Site, is basically a central admin location where you can manage multiple primary sites in one console.

When SCCM 2012 was released the CAS had to be installed during the initial install phase, and as a result a lot of people deployed a CAS as a just-in-case design. The CAS then received a lot of negative press because it added a layer of complexity that many didn't understand. I am going to cover the physical things to do when deploying a CAS in another blog, but in this blog we are just going to look at some of the design decisions you may go through when choosing to deploy a CAS.

A single Primary Site (PS) can support up to 100k clients. I have never deployed an SCCM environment with 100k seats but have installed a CAS 3 times, so was I wrong to do that? Let me give you a profile of 1 install. The customer was a retail customer with a total of about 80k seats. The client was expecting about 5% growth over the next 3 years, and they have a support goal of never going past 80% of the Microsoft supportable limit, so we needed a CAS to support more than 1 PS.
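The sizing logic above is just arithmetic, and a quick sketch makes it easy to rerun for other seat counts. I am assuming here that the 5% is total growth over the 3 years rather than per year, and the function names are my own.

```python
def projected_seats(current_seats: int, growth_percent: int) -> int:
    """Seat count after the expected growth (treated as total growth over the period)."""
    return current_seats * (100 + growth_percent) // 100


def primary_sites_needed(seats: int, supported_limit: int = 100_000,
                         ceiling_percent: int = 80) -> int:
    """Primary sites needed to stay under the chosen share of the supported limit."""
    usable_per_site = supported_limit * ceiling_percent // 100
    return -(-seats // usable_per_site)  # ceiling division


if __name__ == "__main__":
    seats = projected_seats(80_000, 5)
    print(seats, primary_sites_needed(seats))  # prints: 84000 2
```

With the 80% ceiling a single PS is good for 80k seats, so 84k projected seats tips the design over to a second PS, and that in turn means a CAS.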

My next install was for 45k seats with a growth rate of about 15% over the next 3 years, which is well within the 100k limit. With this deployment we still went for a CAS and 3 PS servers. So if the CAS brings more complexity and more databases and servers, then why choose a CAS?

It came down to the following points:

If I have a single PS server supporting say 50k clients and it goes down, then we can add no new software, clients or OS images. No new policies can be sent to the clients, and this means it's a pretty big single point of failure for a large org.

In this example we have 1 CAS and 3 PS; the 3 PS were in the US, EU and APAC. With this design we could lose the CAS and 2 PS servers and still have no issues with the last PS working.

There is no doubt that with the changes in SCCM SP1, and the ability to add a CAS after installing the first PS, it's going to be more likely that smaller sites will not go for a CAS to begin with unless they really need one. And who should decide that ... well, you.

In SP1 there are also some good changes to how we can view what is happening from a site replication standpoint from within the monitoring component.

Wednesday, March 6, 2013

SCCM 2012 SP1 Pre-Stage Content on a DP

In this post we will look at how you can pre-stage content on a DP. As with all the other videos, it needs to be watched with the YouTube quality setting on HD, as per the clip below.

User Device affinity Video

This blog is a video blog on User Device Affinity in SCCM 2012. UDA is the ability to link a machine to a user. When we link the user account to the computer account we can target software to the user yet not have that software apply to every machine they log on to.

All of these videos are meant to be watched in HD.

Tuesday, March 5, 2013

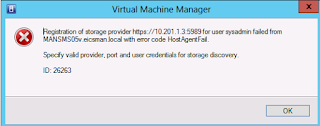

Host Agent Fail Error ID:26263 Adding new storage

In a lab environment it's not that easy to get access to enterprise storage, and as such it sometimes takes a bit of trial and error to get the SMI-S provider working, as we can't practice it in a lab. I was recently working with an EMC VNX 5300 SAN, so here is what I did to get the provider working. (I will add that I got a bit of help from EMC support, but I would not have got it going with just the information on TechNet.)

No matter what I did I kept getting the following error when importing my storage. There was almost no information on the web except other people with the same error.

Let's start off with the SMI-S provider and what it does. With a large and disparate set of storage manufacturers, all with different ways to communicate with their storage devices, it is difficult for other vendors to make products that can manage or monitor storage devices through one interface. SMI-S (the Storage Management Initiative Specification) is therefore a vendor-neutral standard, produced by an independent industry group (SNIA), for managing storage devices. So how do they communicate? Well, when you install the SMI-S provider it essentially creates an HTTP server that can parse XML, so that, for example, when an administrator in the VMM console requests a LUN for new storage for a cloud, that request is sent to the provider, which in turn translates it into the creation of a new storage LUN on the SAN. If you consider the impact of that, we can easily have a configuration whereby the VMM admin can control storage, hypervisor and network components in one place. That's a really powerful admin position in terms of reducing the admin overhead.
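To give a feel for the "HTTP server that can parse XML" idea, here is a small sketch that builds the kind of CIM-XML request body a management tool could post to an SMI-S provider. It is only an illustration of the message shape: the root/emc namespace and the CIM_ComputerSystem class are assumptions on my part, the function name is made up, and this is not a capture of what VMM actually sends.

```python
import xml.etree.ElementTree as ET


def build_enumerate_request(namespace: str, class_name: str, message_id: str = "1001") -> bytes:
    """Build a CIM-XML EnumerateInstanceNames request body as UTF-8 bytes."""
    cim = ET.Element("CIM", CIMVERSION="2.0", DTDVERSION="2.0")
    message = ET.SubElement(cim, "MESSAGE", ID=message_id, PROTOCOLVERSION="1.0")
    request = ET.SubElement(message, "SIMPLEREQ")
    call = ET.SubElement(request, "IMETHODCALL", NAME="EnumerateInstanceNames")
    # The namespace is sent as one NAMESPACE element per path segment.
    path = ET.SubElement(call, "LOCALNAMESPACEPATH")
    for part in namespace.split("/"):
        ET.SubElement(path, "NAMESPACE", NAME=part)
    param = ET.SubElement(call, "IPARAMVALUE", NAME="ClassName")
    ET.SubElement(param, "CLASSNAME", NAME=class_name)
    body = ET.tostring(cim, encoding="unicode")
    return ('<?xml version="1.0" encoding="utf-8"?>\n' + body).encode("utf-8")


if __name__ == "__main__":
    # Assumed namespace and class, purely for illustration.
    print(build_enumerate_request("root/emc", "CIM_ComputerSystem").decode("utf-8"))
```

The provider answers a request like this with an XML response over the same HTTP connection, which is how one console can talk to storage from different vendors in the same way.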

Have a look at what Microsoft wrote about it on TechNet. http://blogs.technet.com/b/filecab/archive/2012/06/25/introduction-to-smi-s.aspx

Before we get into adding the storage, here is a diagram to explain, in overview, what you are supposed to be doing.

Firstly you will need to get the SMI-S provider from EMC. The one I used was se7507-Windows-x64-SMI.

Then follow through with the install as shown below, but be sure to install the provider on something like one of your Hyper-V clusters and not on the VMM server. You can install it multiple times, as it's essentially just brokering a connection to your SAN, and your SAN doesn't care if there is another one installed.

Once you have the install done there are two command sets we need to run, and they are both under %ProgramFiles%\EMC. The two folders you will need to go to are:

For authorization:

%ProgramFiles%\EMC\SYMCLI\bin

And to check the deployment:

%ProgramFiles%\EMC\ECIM\ECOM\bin

I am assuming that you are running this on Server 2012, so you are going to need to open any CMD sessions from an elevated command prompt.

Enter the command that I have here, replacing the host IP address (10.201.0.83 in my case) with your own.

If you get the command wrong it will just prompt you to let you know that you have entered the command incorrectly, as shown below.

Then navigate to %programfiles%\emc\ecim\ecom\bin and enter the Disco command. Now it's important here to understand that there is a bit of a design fault with the way EMC has designed this, as the only account that works for setting up the connection is the account that has full admin access to the storage device. Let's hope this is changed in the near future.

Now we are going to change to the second folder set and verify the SMI-S connection. In the CMD screen below, where I have not entered a value I just accepted the defaults.

The last thing that needs to be done now is to enter "Disco" to discover your storage provider. As you can see from the value below, 0 means you are connecting OK.

Now that we have the SMI-S provider talking to the SAN we can go to VMM and add in the storage array. At a high level here is what is needed:

- Create a RunAs account

- Add the storage provider (it's super important to understand that this is the host where you have installed the provider and not your VMM server)

- Once the storage is added you can create LUNs

- The new LUNs need to be added to your cluster in VMM

To begin the process we need a RunAs account. I used my generic admin account as per the steps above. As this is not a domain account you do not want to validate the domain creds.

I added the storage by its network name, and to do this I had to add that name to DNS. The protocol is SMI-S CIMXML and the port number is what was specified in your provider test in the previous section; this is usually 5988.
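Before adding the provider in VMM it can save time to confirm that the name resolves and the port answers from the VMM server. This is a minimal sketch using a plain TCP connect; the host name smis-host.example.local is made up, and a successful connect only proves that something is listening on the port, not that the provider is healthy.

```python
import socket


def provider_port_open(host: str, port: int = 5988, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers DNS failures, refused connections and timeouts.
        return False


if __name__ == "__main__":
    # Hypothetical name of the host where the SMI-S provider is installed.
    host = "smis-host.example.local"
    state = "reachable" if provider_port_open(host) else "not reachable"
    print(f"{host}:5988 is {state}")
```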

The storage array gets discovered and you can verify that the provider is working OK.

I get a confirmation of the storage addition and the provider address.

Now when I go to create the LUN I can do so from within VMM. In this case I am using thin provisioning on the LUN, as there is no need to commit all the disk right from the start.

You can now go to the fabric in VMM and add the storage

You can now see that there are 3 LUNs being made available to the cluster.

Following these steps I was able to add the storage and manage the LUN through VMM. When I saw this all working the way it's supposed to, I was really impressed with the working reality of this product.

Just one last note: there is a way to test the SMI-S provider, and it's a SourceForge download that you can get here.

PK

Saturday, March 2, 2013

Visual checklist for Windows Azure Services

Thinking of installing Windows Azure Services for Windows Server, or having some issues with your deployment? Well, here is a visual checklist to help you get the deployment going successfully. The majority of the actual settings are focused on the SPF install. The reason for this is that the portal really just front-ends the VMM requests, so a focus on how you have SPF installed will pay off.

If you are still trying to get your head around the product then have a look at the overview post on Windows Azure Services

To begin with, before anything happens with Windows Azure Services you need to have VMM working fully. So what does that mean?

- A cloud that is fully configured in terms of network, storage and hypervisors

- Being able to deploy VMs to a cloud (not a host; Windows Azure Services will only deploy to a cloud)

SPF needs to be installed and tested. To test SPF, enter the following 2 URLs into your browser. In the two examples below I am using localhost, so the test needs to be done locally. If you want to run this remotely then just enter the name of the SPF server instead of localhost. If the result is successful you will see two XML screens, examples of which are below.

https://localhost:8090/SC2012/VMM/microsoft.management.odata.svc/

https://localhost:8090/SC2012/Admin/microsoft.management.odata.svc/
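If you are testing from another machine, the two URLs only differ from the ones above by the host name. The sketch below builds both from a server name so they can be pasted into a browser; the function name and the server name spf01.example.local are made up for the example.

```python
from typing import List

SPF_PORT = 8090                   # port used in the two test URLs above
SPF_SERVICES = ("VMM", "Admin")   # the two SPF endpoints to check


def spf_test_urls(host: str = "localhost") -> List[str]:
    """Return the two SPF OData test URLs for the given SPF server name."""
    return [
        f"https://{host}:{SPF_PORT}/SC2012/{service}/microsoft.management.odata.svc/"
        for service in SPF_SERVICES
    ]


if __name__ == "__main__":
    # Hypothetical SPF server name; use "localhost" when testing on the SPF box.
    for url in spf_test_urls("spf01.example.local"):
        print(url)
```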

- The SPF VMM IIS Application Pool identity must run as a domain user (not the Network Service account)

- Configure the SPF IIS web site to use Basic Authentication

- Create a local user on your SPF server, add it to the SPF local security groups and then use that local user to register the Service Management Portal

- The SQL instance that you are deploying to must have SQL authentication enabled, and the SPF Application Pool identity must have admin access to the SPF SQL DB and VMM

Just a note in terms of deploying this inside an enterprise environment: it's not supported to use the portal with an AD user that is in the same domain as the VMM server. At the time of writing (March 2013) the current version does not support an AD user, so I think this is a moot point.

Thanks to Marc Umeno for this great advice at the MVP summit.
